How to improve translation quality with smart terminology integration – a blc success story

Initial situation

When machine translation meets technical language

Machine translation (MT) is now part of everyday life in many companies – including the highly regulated pharmaceutical industry. But what happens when MT meets complex, product-specific terminology? And what if training your own models is no longer sufficient?

A European pharmaceutical company faced precisely this challenge: an extensive, well-maintained terminology database was available, but it was not linked to machine translation. The result was a lack of consistency, high post-editing effort and, consequently, additional costs.

Together with blc, a concept was developed to integrate the existing terminology into the MT system via glossaries.

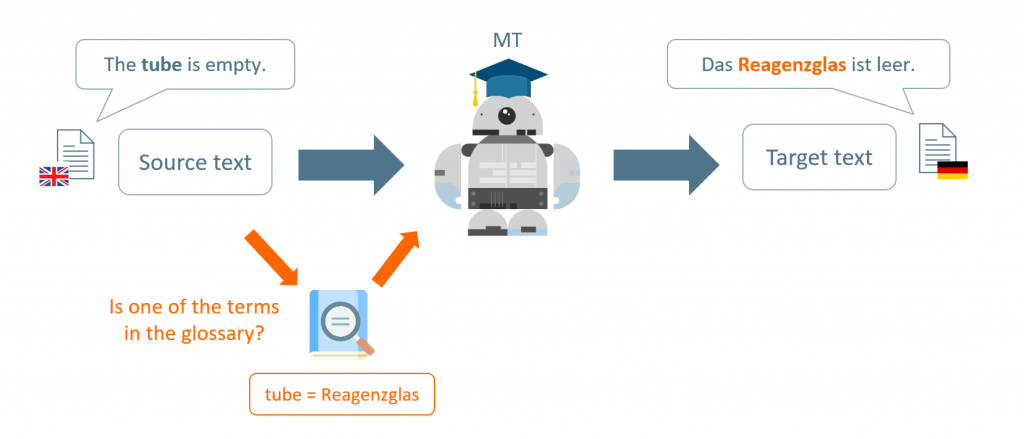

How do MT glossaries work?

An MT glossary is essentially a list of fixed translation assignments that the machine takes into account during translation. The system checks whether a word in the source text is listed in the glossary and, if so, automatically replaces it with the specified target-language term, regardless of context. Unlike training an AI model, which learns complex relationships using neural methods, the glossary works in a rule-based way and takes effect immediately: it overrides the standard translation without (re)training the model. This makes the method particularly suitable for keeping product- or brand-specific terminology consistent across different projects, languages and systems.
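The lookup step can be illustrated with a minimal Python sketch. It is a toy illustration only: real MT systems apply glossaries internally, and the glossary entries, function names and matching rules here are assumptions made for the example, not the behaviour of any particular engine.

```python
import re

# Hypothetical English > German glossary: source term -> required target term.
GLOSSARY = {
    "package leaflet": "Packungsbeilage",
    "active ingredient": "Wirkstoff",
}


def find_glossary_hits(source: str, glossary: dict[str, str]) -> list[tuple[str, str]]:
    """Return (source term, required target term) for each glossary term in the text."""
    hits = []
    # Check longer terms first so multi-word terms are reported before shorter ones.
    for term in sorted(glossary, key=len, reverse=True):
        # Whole-word, case-insensitive match (an assumption of this sketch).
        pattern = r"\b" + re.escape(term) + r"\b"
        if re.search(pattern, source, flags=re.IGNORECASE):
            hits.append((term, glossary[term]))
    return hits


def missing_targets(source: str, translation: str, glossary: dict[str, str]) -> list[str]:
    """List required target terms that do not appear in the translation."""
    lowered = translation.lower()
    return [
        target
        for _, target in find_glossary_hits(source, glossary)
        if target.lower() not in lowered
    ]


source = "Read the package leaflet carefully."
print(find_glossary_hits(source, GLOSSARY))
print(missing_targets(source, "Lesen Sie den Beipackzettel sorgfältig.", GLOSSARY))
```

The second call flags a translation that uses a synonym instead of the glossary term, which is exactly the kind of inconsistency a glossary is meant to prevent.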

Approach

Termbase vs. glossary: Same same but different

While the terminology database is entry-oriented (concept-oriented), i.e. it groups several synonyms into entries that carry metadata and context information at different levels, an MT glossary needs term-oriented 1:1 mappings. This simplification is necessary so that the MT system can correctly recognize and use the technical terms in any context. It also means, however, that the existing terminology database cannot simply be loaded into the MT system unprocessed.
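The difference between the two structures can be sketched in Python. The data model below (`Term`, `Entry`, a `preferred` flag) is a hypothetical simplification, not the customer's actual termbase schema, and the flattening rule is just one plausible way to arrive at 1:1 mappings.

```python
from dataclasses import dataclass


@dataclass
class Term:
    text: str
    lang: str
    preferred: bool  # hypothetical flag marking the preferred term per language


@dataclass
class Entry:
    entry_id: str
    terms: list[Term]  # all synonyms of one concept, across languages


def flatten(entries: list[Entry], src: str, tgt: str) -> dict[str, str]:
    """Map every source-language synonym to the single preferred target term."""
    pairs: dict[str, str] = {}
    for entry in entries:
        preferred = [t.text for t in entry.terms if t.lang == tgt and t.preferred]
        if len(preferred) != 1:
            continue  # skip entries without exactly one preferred target term
        for term in entry.terms:
            if term.lang == src:
                pairs[term.text] = preferred[0]
    return pairs


entries = [
    Entry("E1", [
        Term("package leaflet", "en", True),
        Term("patient information leaflet", "en", False),
        Term("Packungsbeilage", "de", True),
        Term("Beipackzettel", "de", False),
    ]),
]
print(flatten(entries, "en", "de"))
```

In this sketch both English synonyms end up pointing to the same German term, while the non-preferred German synonym is dropped from the glossary.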

The process for creating glossaries

At the beginning of the project, the more than 60 language pairs were divided into language pair batches and prioritized. Batch by batch, blc worked its way through the terminology, following a repeatable process:

Best practices: What makes a good MT glossary

The following best practices proved their worth during validation in the project:

- Keep it simple: The larger the glossary, the higher the computational effort for each translation request. The glossary should be as extensive as necessary, but as simple as possible.

- Avoid ambiguity: If a term is ambiguous, it should not be included in the glossary.

- Focus on suitable word types: Nouns, product names and acronyms work well; verbs and complex phrases are less suitable.
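Two of these rules lend themselves to automation. The following Python sketch filters glossary candidates by word type and drops ambiguous source terms. The candidate format, the part-of-speech labels and the `ALLOWED_POS` set are assumptions made for illustration, not the validation logic used in the project.

```python
from collections import defaultdict

# Assumed part-of-speech labels that are considered glossary-friendly.
ALLOWED_POS = {"noun", "proper noun", "acronym"}


def validate(candidates: list[tuple[str, str, str]]) -> dict[str, str]:
    """Keep only unambiguous candidates with a suitable word type.

    Each candidate is a (source term, target term, part of speech) tuple.
    """
    # Collect all targets per source term to detect ambiguity.
    targets_by_source: dict[str, set[str]] = defaultdict(set)
    for source, target, _pos in candidates:
        targets_by_source[source.lower()].add(target)

    glossary: dict[str, str] = {}
    for source, target, pos in candidates:
        if pos not in ALLOWED_POS:
            continue  # verbs and complex phrases are less suitable
        if len(targets_by_source[source.lower()]) > 1:
            continue  # ambiguous: more than one possible translation
        glossary[source] = target
    return glossary


candidates = [
    ("tablet", "Tablette", "noun"),
    ("solution", "Lösung", "noun"),
    ("solution", "Auflösung", "noun"),
    ("administer", "verabreichen", "verb"),
]
print(validate(candidates))
```

Here only "tablet" survives: "solution" has two competing translations and "administer" is a verb.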

Terminology is dynamic – and so the glossaries must be too

The crux of the matter: terminology is alive. Products change, markets are added and new terms are coined. To allow the MT glossaries to keep up, a maintenance process has been established that the customer can carry out independently. Deltas are regularly identified via a filter in the terminology database, validated and converted into new glossary versions.
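What such a delta contains can be sketched in Python. Note the swap: in the project the deltas come from a filter in the terminology database, whereas this illustrative sketch simply compares two glossary versions held as dictionaries. The function name and the sample terms are assumptions.

```python
def glossary_delta(old: dict[str, str], new: dict[str, str]) -> dict[str, dict]:
    """Compare two glossary versions and report added, removed and changed terms."""
    added = {s: t for s, t in new.items() if s not in old}
    removed = {s: t for s, t in old.items() if s not in new}
    # For changed terms, keep both the old and the new target term.
    changed = {
        s: (old[s], new[s])
        for s in old.keys() & new.keys()
        if old[s] != new[s]
    }
    return {"added": added, "removed": removed, "changed": changed}


old_version = {"tablet": "Tablette", "vial": "Fläschchen", "syringe": "Spritze"}
new_version = {"tablet": "Tablette", "vial": "Durchstechflasche", "ampoule": "Ampulle"}
print(glossary_delta(old_version, new_version))
```

Only the entries in the delta would then need to be validated before a new glossary version is published.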

Results

Measurably better translation quality

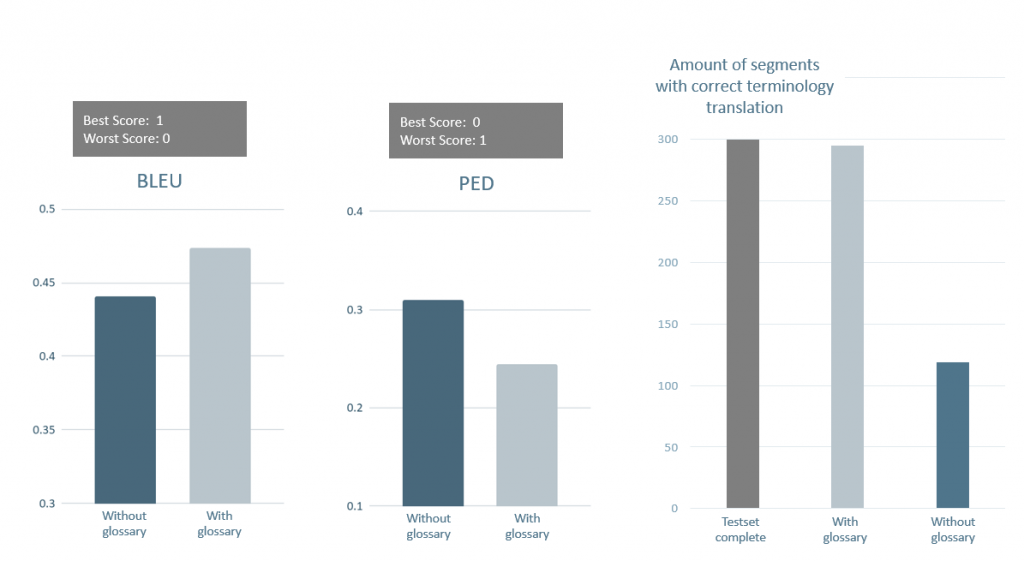

An evaluation of the English>German language direction showed how strongly the glossaries influence the output.

A total of 300 sentences were translated once with and once without the MT glossary, and then evaluated both automatically and manually.

The result: When translating with MT glossary…

Outlook – what’s next?

Glossaries for 17 language directions are currently in productive use, with four more to follow by the end of the year. The next steps are already defined and in progress:

The existing terminology database is being optimized so that glossary validity and the required 1:1 equivalents can be mapped via the data model, despite its concept orientation.

This means that a direct API connection to the MT system will be possible in the future.

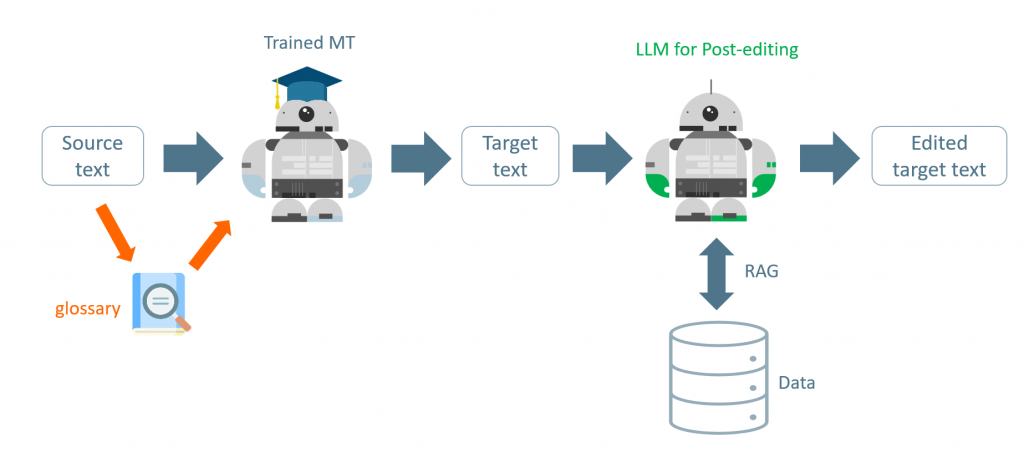

blc and the customer are already talking to the MT system manufacturer about this.

Another opportunity for the future is the use of terminology for LLMs and RAG scenarios, providing AI systems with terminologically clean knowledge. In the long term, this could lead to a combined approach of trained MT, glossaries and terminology database.

Conclusion: Terminology integration is a key success factor for the quality of (AI-supported) translations. By consistently using existing terminology resources, you can achieve more consistent results and more efficient translation processes.

Want to learn more about this success story? This use case will be presented at tcworld 2025. Come by or write to us directly!