Whenever machine translation (MT) components are introduced into a translation workflow, the system or engine must first prove itself in terms of quality and usability. To find out whether the engine meets all requirements, an initial series of data surveys is necessary. Only on such grounds is it possible to get an impression of quality or productivity gains. In today’s blog post, we explain how to measure the quality of machine translations with the Dynamic Quality Framework (DQF) by TAUS.

The Use Case – Keeping Data In One Place

Imagine you’re a translation manager and your task is to assess the quality and productivity of a machine translation (MT) engine in use. In a medium-sized to large company or LSP, you would like to evaluate the relevant KPIs (edit time, edit distance, error types) easily and in one place, instead of aggregating individual reports from every expert working on a translation project. To show how this can be done in practice, we came up with a basic setup that enables the translation manager to keep track of all the data with the help of TAUS DQF.
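Edit distance, one of the KPIs mentioned above, counts how many changes a post-editor made to the MT output. As a rough illustration, the following minimal sketch computes a word-level Levenshtein distance between an MT segment and its post-edited version and normalizes it by the length of the post-edited text. This is a generic technique, not necessarily the exact metric DQF calculates, and the function names are hypothetical.

```python
def word_edit_distance(mt: str, post_edited: str) -> int:
    """Minimum number of word insertions, deletions and substitutions
    needed to turn the MT output into the post-edited text."""
    a, b = mt.split(), post_edited.split()
    # prev[j] = distance between the first i-1 words of a and the first j words of b
    prev = list(range(len(b) + 1))
    for i, word_a in enumerate(a, start=1):
        curr = [i] + [0] * len(b)
        for j, word_b in enumerate(b, start=1):
            cost = 0 if word_a == word_b else 1
            curr[j] = min(
                prev[j] + 1,         # delete word_a
                curr[j - 1] + 1,     # insert word_b
                prev[j - 1] + cost,  # substitute (or keep a matching word)
            )
        prev = curr
    return prev[-1]


def normalized_edit_distance(mt: str, post_edited: str) -> float:
    """Edit distance relative to the number of words in the post-edited segment."""
    ref_len = len(post_edited.split())
    # max(..., 1) avoids division by zero for empty segments
    return word_edit_distance(mt, post_edited) / max(ref_len, 1)
```

For example, `word_edit_distance("the cat sat on mat", "the cat sat on the mat")` returns 1 (one inserted word), which gives a normalized distance of about 0.17. Averaging this value over all segments of a project yields a simple indicator of how much of the engine's output had to be changed.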

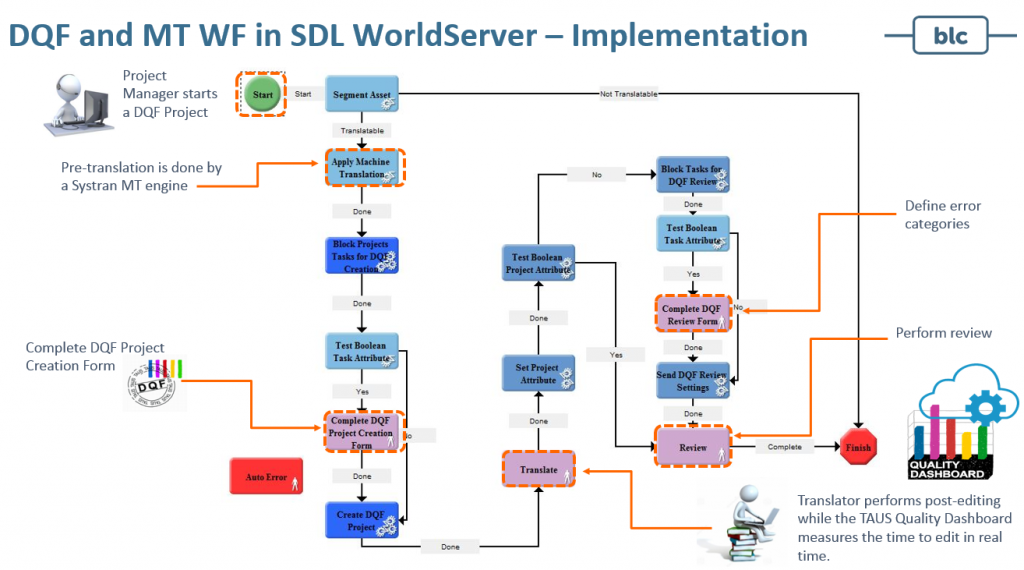

The systems applied in our use case (which we recently presented at ETUG 2018) are SDL WorldServer and SDL Trados Studio, SYSTRAN's Pure Neural MT engine, and the DQF plug-in by TAUS. The roles involved are project manager, post-editor and reviewer. The following table shows the important workflow steps as well as the systems and roles involved:

Hands On – Setting Up The Workflow

Before the workflow can be used in practice, it must be defined in SDL WorldServer. To do this, the workflow steps contained in the TAUS DQF plug-in have to be arranged and combined with a machine translation step that requests automatic translation via the SYSTRAN API (see figure). The workflow steps 'Complete DQF Project Creation Form' and 'Complete DQF Review Form' are essential here. In the project creation step, the user defines the project attributes that are used to create a new DQF project in an existing TAUS account. In the review form step, all error categories for the review are predefined. SDL Studio retrieves this information when the translation package is imported and adds it as a DQF project. In this workflow, a review of the post-edited machine translation is mandatory, but you could also design a workflow that decides whether a review is needed based on the amount of post-editing performed on the machine translation output.
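The alternative mentioned above, a workflow that decides on a review based on the amount of post-editing, could rest on a decision rule like the one sketched here. It assumes that (MT output, post-edited) segment pairs are available and uses Python's `difflib.SequenceMatcher` as a simple character-level similarity measure. The 0.3 threshold and the function names are hypothetical, and how such a rule would be wired into a WorldServer workflow is not shown.

```python
from difflib import SequenceMatcher
from typing import Iterable, Tuple

# Hypothetical threshold: route the job to review if, on average, more than
# 30 % of the MT output was changed during post-editing.
REVIEW_THRESHOLD = 0.3


def edit_share(mt: str, post_edited: str) -> float:
    """Share of the segment that was changed: 1 minus the difflib similarity ratio."""
    return 1.0 - SequenceMatcher(None, mt, post_edited).ratio()


def needs_review(segments: Iterable[Tuple[str, str]],
                 threshold: float = REVIEW_THRESHOLD) -> bool:
    """Decide whether a post-edited job should be sent to a reviewer.

    `segments` holds (mt_output, post_edited) pairs for one job.
    """
    shares = [edit_share(mt, pe) for mt, pe in segments]
    if not shares:
        return False  # nothing was translated, so there is nothing to review
    return sum(shares) / len(shares) > threshold
```

A job whose segments were left untouched would skip review, while a heavily rewritten one would be routed to the reviewer. In practice, the threshold would need to be calibrated against real review results.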

You can also take a detailed look at the workflow steps in our SlideShare presentation.

The Dashboard – Making Sense of The Data

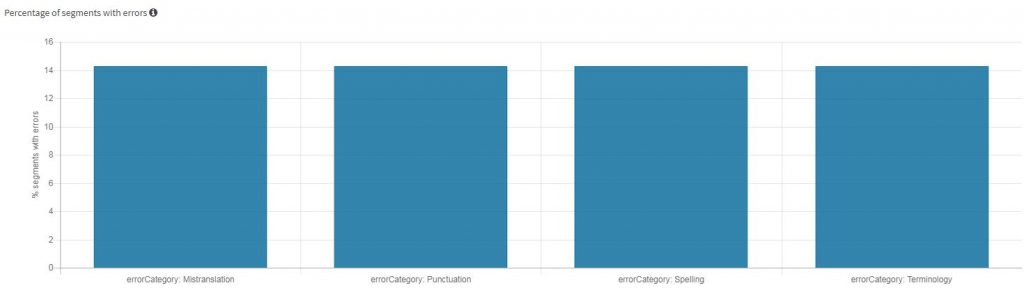

While post-editing and review take place, the project manager can view all results in the TAUS Quality Dashboard in real time as soon as the project is saved in SDL Studio. The dashboard offers many ways to present the data, with a multitude of filters for cross-referencing edit data and error annotations. This enables project managers to assess both the overall quality of the machine translation and the productivity gains achieved with machine translation and post-editing.
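To make the cross-referencing of edit data and error annotations more concrete, the following sketch aggregates per-segment records into a few project-level figures: throughput in words per hour, error counts per category and error density. The `SegmentRecord` structure and the category labels are assumptions for illustration; they do not reflect the actual DQF data format or how the TAUS Quality Dashboard computes its figures.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List


@dataclass
class SegmentRecord:
    """Hypothetical per-segment record (not the actual DQF data format)."""
    source_words: int
    edit_time_seconds: float
    errors: List[str] = field(default_factory=list)  # error category labels


def summarize(records: List[SegmentRecord]) -> dict:
    """Aggregate edit data and error annotations for a whole project."""
    total_words = sum(r.source_words for r in records)
    total_hours = sum(r.edit_time_seconds for r in records) / 3600
    error_counts = Counter(e for r in records for e in r.errors)
    total_errors = sum(error_counts.values())
    return {
        "words": total_words,
        # Post-editing throughput in source words per hour
        "words_per_hour": total_words / total_hours if total_hours else 0.0,
        "errors_by_category": dict(error_counts),
        # Error density normalized to 1,000 source words
        "errors_per_1000_words": (
            1000 * total_errors / total_words if total_words else 0.0
        ),
    }
```

For example, three segments with 12, 8 and 20 source words, edited in 30, 15 and 45 seconds and annotated with one accuracy, one fluency and one terminology error, yield 1,600 words per hour and 75 errors per 1,000 words.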

TAUS also enables users of machine translation to compare their engines for optimization via the TAUS DQF Tools.

Please contact Christian Eisold with any questions regarding this use case or about training, optimizing and integrating machine translation.